Will AI Lead To The Legal Singularity?

The law is sometimes presented as a monolithic construct. Fixed and (relatively) immovable. “The Rule of Law.” “The Letter of the Law,” and so forth.

But like any man-made construct, it is made and managed by people. And the result is countless variations in interpretation and application of the law. Is Artificial Intelligence about to change all that?

In recent years, there has been increasing discussion about the “Legal Singularity,” a state where uncertainty about the law is removed. It’s been suggested that this, in turn, would bring about more equity and fairness, along with greater transparency and accessibility to justice. Last year, Blue J Legal co-founder Benjamin Alarie gave a TEDx talk suggesting that the result would even be a reinvented social order.

Earlier this month, the topic was raised again at FutureLaw 2019, a conference hosted by CodeX, Stanford Law School’s Center for Legal Informatics, on the ways technology is changing the legal profession and the law itself.

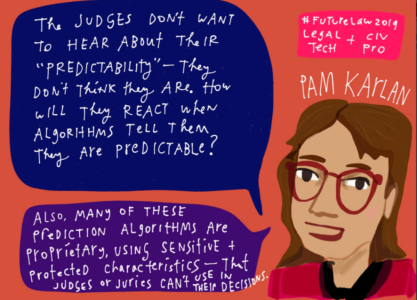

A panel discussion looked at whether Artificial Intelligence has the potential to remove uncertainty in the law to the point where legal decisions become perfectly predictable, and if so, what the implications would be.

Stanford Law School Professor and Associate Dean for Strategic Initiatives David Freeman Engstrom moderated the panel of Hon. Lee Rosenthal, Chief Judge of the United States District Court for the Southern District of Texas; Pamela Karlan, Co-Director of Stanford Law School’s Supreme Court Litigation Clinic and Professor of Law; Elizabeth Cabraser, Partner at Lieff Cabraser Heimann & Bernstein; and Jonah Gelbach, Professor of Law at University of Pennsylvania Law School.

The members of the panel talked about when and how AI is useful, how it may change the practice of law, and the moral issues involved.

“At what level do I trust machines on degree of believability, plausibility, reliability, all things that humans learn through years of social experience, and does this vary based on questions of fact (‘the light was green!’) and questions of expertise (‘I am a scientist’)?” said Rosenthal.

“Judges don’t want to be told how we think they will rule,” said Karlan, on the issue of predicting judicial decisions. “The integrity of the judiciary is already poisoned by the administration’s appointment of judges. If algorithms add on to that, we are in trouble.”

A video of the hour-long panel discussion was posted by CodeX.